Welcome to the NTCIR-13 WWW Task!

Note: A new round of the We Want Web task (WWW2) has already started. You can find more information on the NEW WEBSITE. If you are interested in the WWW1@NTCIR-13 data for research purposes, please contact the organizers for more information.

NTCIR-13 WWW is an ad hoc Web search task, which we plan to continue for at least three rounds (NTCIR 13-15). Information access tasks have diversified: there are currently various novel tracks/tasks at NTCIR, TREC, CLEF, etc. This is in sharp contrast to the early TRECs, which had only a few tracks, with the ad hoc track at the core. But is the ad hoc task a solved problem? It seems to us that researchers have moved on to new tasks not because they have completely solved the problem, but because they have reached a plateau. Ad hoc Web search, in particular, is still of utmost practical importance. We believe that IR researchers should continue to study and understand the core problems of ranked retrieval and advance the state of the art.

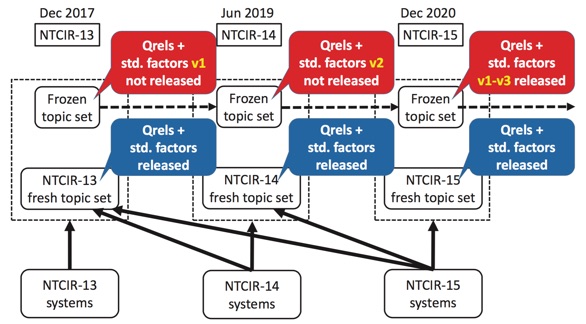

The main task of WWW is a traditional ad hoc task. We will also consider a session-based subtask. Pooling and graded relevance assessments will be conducted as usual. After releasing the evaluation results, we will work with participants to conduct an organized failure analysis, following the approach of the Reliable Information Access workshop. We believe that progress cannot be achieved if we just keep looking at mean performance scores. More information is provided in the figure above.

Tentative Schedule

- • Jul-Aug 2016 Corpora released to registered participants

- • Aug-Sep 2016 Designing and constructing topics

- • Oct 2016-Jan 2017 User behavior data collected for the topics

- • Feb-Mar 2017 User behavior data released to registered participants

- • Apr 2017 Task registration due

- • May 2017 Topics released; runs received

- • July 16, 2017 Runs received

- • July-Aug 2017 Relevance assessments

- • Sep 1, 2017 Results and Draft Task overview released to participants

- • Oct 1, 2017 Participants’ draft papers due

- • Nov 1, 2017 All camera ready papers due

- • Nov 2017 Pre-NTCIR-13 WWW Workshop on Failure Analysis in Beijing

- • Dec 2017 NTCIR-13 Conference

Participation

Participants can…

- • Easily participate in this standard ad hoc task, and monitor improvements over 4.5 years (3 NTCIR rounds).

- • Leverage user behavior data + new Sogou corpus and query log

- • Discover what cannot be discovered in a single-site failure analysis

To participate in the NTCIR-13 WWW task, please read How to participate NTCIR-13 task and register via the NTCIR-13 online registration page.

Organizers

- • Cheng Luo (Tsinghua University)

- • Tetsuya Sakai (Waseda University)

- • Yiqun Liu (Tsinghua University)

- • Zhicheng Dou (Renmin University of China)

- • Jingfang Xu (Sogou Inc.)

- • Chenyan Xiong (Carnegie Mellon University)

If you have any questions, feel free to contact the organizers at ntcirwww@list.waseda.jp!

Task Design

- • An ad hoc web search task, which we plan to continue for at least three rounds (NTCIR 13-15).

- • Some user behavior data will be provided to improve search quality.

- • Evaluation measures will also take into account user behavior, along with traditional ranked retrieval measures.

- • English and Chinese subtasks for NTCIR-13 (+Japanese for NTCIR-14)

- • Collective failure analysis at a pre-NTCIR-13 workshop

Data

Query Topics

- • We have 100 queries for the Chinese/English subtasks. About 40 topics will be shared across languages for possible future cross-language research.

- • The Chinese queries are sampled from the median-frequency queries collected from Sogou search logs, while the English queries are sampled from another international search engine’s logs.

- • The queries are organized in XML and can be downloaded here (Chinese, English).

Web Collection

- • For the Chinese Subtask, we adopt the new SogouT-16 as the document collection. SogouT-16 contains about 1.17B webpages sampled from the index of Sogou. Because the full SogouT-16 may be difficult for some research groups to handle (almost 80TB after decompression), we have prepared a “Category B” version, denoted “SogouT-16 B”. This subset contains about 15% of the webpages in SogouT-16 and will be used as the Web Collection. The collection is free for research purposes: apply online and then send an email to Mr. Cheng Luo (luochengleo AT gmail.com) to get it. SogouT-16 also has a free online retrieval/page rendering service; you will get an account after you apply for SogouT-16.

- • For the English Subtask, we adopt ClueWeb12-B13 as the document collection. To obtain the ClueWeb12 corpus, you first have to sign an agreement. This corpus is also free for research purposes; you only need to pay for the disks and shipping. More information can be found on the ClueWeb12 homepage. If you have any questions, please contact Mr. Chenyan Xiong (cx AT cs.cmu.edu). If you already have permission to use ClueWeb12, you can use your own copy to start the task. ClueWeb12 also has a free online retrieval/page rendering service, which can be used once the agreement is signed.

- • Note: You may feel that applying for the document collections takes too much time and effort. Don’t worry! We have a much easier option. For both subtasks, we provide baseline runs (the top 1000 documents for each query). For SogouT-16, you only need to sign an application form online and we can send you the original documents. For ClueWeb12, you just need to sign an agreement with CMU and we can send you the original documents.

Baseline Runs

- • For the Chinese Subtask, we have the top 1000 results for each topic. These results were obtained by our retrieval system, which is based on Apache Solr with the standard BM25 ranking function.

- • For the English Subtask, we also have the top 1000 results for each topic. The results were retrieved by Indri, a well-known open-source search engine.

- • The baselines are organized in standard TREC format and can be downloaded here (Chinese/English).
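For participants who plan to rerank a baseline, the following is a minimal sketch of how a TREC-format run could be loaded. It assumes six whitespace-separated fields per line (topic ID, an unused literal, document ID, rank, score, run tag), which matches the submission format described later on this page; the function name `parse_trec_run` and the run tag `baseline` in the sample are hypothetical, and the actual baseline files may differ in detail.

```python
from collections import defaultdict


def parse_trec_run(lines):
    """Group TREC-format run lines by topic ID.

    Assumed line layout (whitespace separated):
    topic ID, unused literal, document ID, rank, score, run tag.
    """
    run = defaultdict(list)
    for line in lines:
        stripped = line.strip()
        # Skip blank lines and any header line such as <SYSDESC>...</SYSDESC>
        if not stripped or stripped.startswith("<"):
            continue
        parts = stripped.split()
        if len(parts) != 6:
            continue  # ignore malformed lines instead of failing
        topic_id, _unused, doc_id, rank, score, _tag = parts
        run[topic_id].append((int(rank), doc_id, float(score)))
    # Sort each topic's documents by rank (first tuple element)
    return {topic: sorted(docs) for topic, docs in run.items()}


sample = [
    "0101 0 clueweb12-0006-97-23810 1 27.73 baseline",
    "0101 0 clueweb12-0009-08-98321 2 25.15 baseline",
]
baseline = parse_trec_run(sample)
```

The result maps each topic ID to a rank-ordered list of `(rank, document ID, score)` tuples, which is a convenient starting point for a reranking step.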

User Behavior Collection

For the Chinese Subtask, we provide a user behavior collection for the participants. The behavior collection includes two parts.

- • Training set: we have 200 queries. We provide users’ clicks, the URLs of the results presented in Sogou’s query logs, and some relevance annotations. More specifically, each item in the training set has the form:

{anonymized User ID} {query} {a list of URLs presented to the users} {clicked URL} {some relevance labels}

- • Test set: for the 100 queries used in the Chinese Subtask, we provide users’ clicks and the URLs of the results presented in Sogou’s query logs. The data is organized in the same way as the training set.

These user behavior data were collected from Sogou’s search logs in 2016. Due to privacy concerns, the users’ IDs are anonymized.

If you want to use this data, please contact Mr. Cheng Luo (luochengleo AT gmail.com) for further details. At this moment, this data is available only to We Want Web participants.

Time to Obtain Data

- • For the Chinese corpus (SogouT) and the user behavior dataset, we will first sign an agreement. After the agreement (soft copy) is received, we will send you the data. The delivery of disks takes about one week. The user behavior data can be sent to you online separately.

- • For the English corpus (ClueWeb12), the application and delivery take about 2-3 weeks. You will find more details about the application procedure on the official website.

- • We suggest that you start the data application procedure as early as possible.

Submission

These submission instructions apply to both the Chinese and English Subtasks. Submissions must be compressed (zip). Each participating team can submit up to 5 runs for each subtask. In each run, please submit up to 100 documents per topic. We may use a cutoff, e.g. 10, in evaluation.

All runs should be generated completely automatically. No manual run is allowed.
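As an illustration of the packaging rules above, here is a minimal sketch that bundles the run files for one subtask into a zip archive and refuses more than 5 runs. The function name `zip_runs` and the constant `MAX_RUNS` are hypothetical, and storing the files flat (without directories) inside the archive is an assumption, not a stated requirement.

```python
import zipfile
from pathlib import Path

MAX_RUNS = 5  # up to 5 runs per team per subtask


def zip_runs(run_files, archive_path):
    """Pack the given run files into one zip archive and return its path."""
    run_files = [Path(p) for p in run_files]
    if len(run_files) > MAX_RUNS:
        raise ValueError(f"at most {MAX_RUNS} runs are allowed per subtask")
    archive_path = Path(archive_path)
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in run_files:
            # Store only the file name so the archive has no directory structure
            zf.write(path, arcname=path.name)
    return archive_path
```

For example, `zip_runs(["THUIR-C-CU-Base-1.txt"], "THUIR-C.zip")` would produce a single archive for the Chinese subtask; the archive name here is only an example.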

Run Names

Run files should be named as “<teamID>-{C,E}-{PU, CU, NU}-{Base, Own}-[priority].txt”

<teamID> is exactly the Team ID you used when registering for NTCIR-13. You can contact the organizers if you have forgotten your Team ID.

{C,E}: C for Chinese runs, E for English runs

{PU,CU,NU}: Runtypes

- • PU (presented URLs, without click info, are utilized for ranking)

- • CU (clicked URLs are utilized for ranking)

- • NU (no URLs are utilized for ranking)

{Base, Own}: all runs that are based on the provided baseline runs are Base runs; otherwise they are Own runs, i.e. the documents are retrieved by a ranking system you built yourself.

[priority]: Due to limited resources, we may not include all submitted runs in the result pool. Therefore, it is important for you to indicate the order in which we should consider your runs for result pool construction. The priority should be between 1 and 5, where 1 is the highest priority.

e.g.

THUIR-C-CU-Base-1
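The naming scheme above can be checked mechanically before submission. The following is a minimal sketch using a regular expression; it assumes that Team IDs consist only of ASCII letters and digits (the page does not specify the allowed characters), and the names `RUN_NAME` and `parse_run_name` are hypothetical.

```python
import re

# Assumption: team IDs contain only ASCII letters and digits.
RUN_NAME = re.compile(
    r"^(?P<team>[A-Za-z0-9]+)"
    r"-(?P<lang>[CE])"            # C for Chinese, E for English
    r"-(?P<runtype>PU|CU|NU)"     # presented / clicked / no URLs used
    r"-(?P<source>Base|Own)"      # built on the baseline, or own retrieval
    r"-(?P<priority>[1-5])$"      # 1 is the highest priority
)


def parse_run_name(name):
    """Return the run name components as a dict, or None if invalid."""
    if name.endswith(".txt"):
        name = name[: -len(".txt")]
    match = RUN_NAME.match(name)
    return match.groupdict() if match else None
```

Calling `parse_run_name("THUIR-C-CU-Base-1")` returns the five components as strings, while a name with an out-of-range priority such as `THUIR-C-CU-Base-6` yields `None`.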

Run Submission Format

For all runs in both subtasks, Line 1 of the submission file must be of the form:

<SYSDESC>[insert a short description in English here]</SYSDESC>

The rest of the file should contain lines of the form:

[TopicID] 0 [DocumentID] [Rank] [Score] [RunName]\n

At most 100 documents should be returned for each query topic.

For example, a run should look like this:

<SYSDESC>[insert a short description in English here]</SYSDESC>

0101 0 clueweb12-0006-97-23810 1 27.73 THUIR-C-CU-Base-1

0101 0 clueweb12-0009-08-98321 2 25.15 THUIR-C-CU-Base-1

0101 0 clueweb12-0003-71-19833 3 21.89 THUIR-C-CU-Base-1

0101 0 clueweb12-0002-66-03897 4 13.57 THUIR-C-CU-Base-1

……
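A run file in this layout could be generated along the following lines. This is a minimal sketch, not an official tool: the function name `format_run` and the shape of the `ranked` input (topic ID mapped to a best-first list of document ID and score pairs) are assumptions made for illustration.

```python
MAX_DOCS_PER_TOPIC = 100  # at most 100 documents per query topic


def format_run(run_name, description, ranked):
    """Build the text of a run file.

    ranked maps a topic ID to a list of (document ID, score) pairs,
    ordered from best to worst.
    """
    # Line 1 must carry the system description
    lines = [f"<SYSDESC>{description}</SYSDESC>"]
    for topic_id in sorted(ranked):
        top_docs = ranked[topic_id][:MAX_DOCS_PER_TOPIC]
        for rank, (doc_id, score) in enumerate(top_docs, start=1):
            lines.append(f"{topic_id} 0 {doc_id} {rank} {score} {run_name}")
    return "\n".join(lines) + "\n"


example = format_run(
    "THUIR-C-CU-Base-1",
    "insert a short description in English here",
    {"0101": [("clueweb12-0006-97-23810", 27.73),
              ("clueweb12-0009-08-98321", 25.15)]},
)
```

Ranks are assigned from the list order, and any documents beyond the 100th for a topic are dropped so the file stays within the stated limit.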

The submission deadline is July 16, 2017 (Japan time).

For each subtask, you may put all your runs in a zip file and submit it via Dropbox. The submission links are as follows:

© copyright 2016-2017. All rights reserved by NTCIR-WWW organizers.