The TianGong-SS-FSD Dataset

We provide the Chinese-centric TianGong-SS-FSD dataset to support research on Web search evaluation. We conducted a field study that collected daily search logs and explicit feedback from 30 participants over one month. In addition, we recruited nine external assessors to make 4-level graded relevance judgments for the first-page search results of each query in the field study.

Motivation

Search evaluation is a central concern in information retrieval (IR) research. An evaluation metric is built on a user model that describes how users interact with a ranked list. The metric maps the relevance scores of the listed documents to an estimate of system effectiveness and user satisfaction. The validity of a metric therefore has two facets: whether its underlying user model accurately predicts user behavior, and whether the metric correlates well with user satisfaction.
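To make "a metric built on a user model" concrete, here is a minimal sketch of rank-biased precision (RBP). RBP is a standard metric chosen here only for illustration; it is not necessarily one of the metrics studied in this work. Its user model always examines rank 1 and continues from each rank to the next with a fixed persistence probability p. The default p = 0.8 and the scaling of 0-3 relevance grades to [0, 1] are assumptions of this sketch.

```python
from typing import Sequence


def rbp(relevance: Sequence[int], p: float = 0.8, max_grade: int = 3) -> float:
    """Rank-biased precision for one ranked list (illustrative sketch).

    User model: the user always examines rank 1 and moves from rank k to
    rank k + 1 with fixed probability p, so rank k is reached with
    probability p ** (k - 1). Graded labels are scaled to [0, 1] by
    dividing by max_grade (assumed 0-3 scale).
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must be in (0, 1)")
    score = 0.0
    for i, rel in enumerate(relevance):  # i = 0 corresponds to rank 1
        gain = rel / max_grade
        score += gain * p ** i  # weight = probability the user reaches this rank
    return (1 - p) * score


# A highly relevant result at rank 1 counts more than the same result at rank 2.
print(rbp([3, 0], p=0.5), rbp([0, 3], p=0.5))
```

A patient user (large p) gives more credit to relevant documents deeper in the list. Checking whether such a continuation model matches logged behavior corresponds to the first facet above; correlating the resulting scores with satisfaction corresponds to the second.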

Logs from commercial search engines provide large-scale data on real search behavior, but it is difficult to obtain explicit feedback (e.g., search satisfaction) directly from users. In lab studies, researchers collect feedback right after each assigned search task; however, these studies are conducted in a controlled environment and may not reflect users' true search intents or real-world behavior patterns.

In this work, we conducted a field study that collected daily search logs and explicit feedback from 30 participants over one month. The resulting dataset combines more accurate annotations than search logs alone with more realistic search behavior than lab studies.

Dataset Description

This dataset contains two parts:

- User behavior data: We record the queries issued by participants, together with the corresponding SERPs and landing pages, including the URLs and HTML content of these pages. Besides page content, we also record participants' mouse interactions (mouse movements, clicks, and scrolling) and temporal information such as dwell time on SERPs and landing pages.

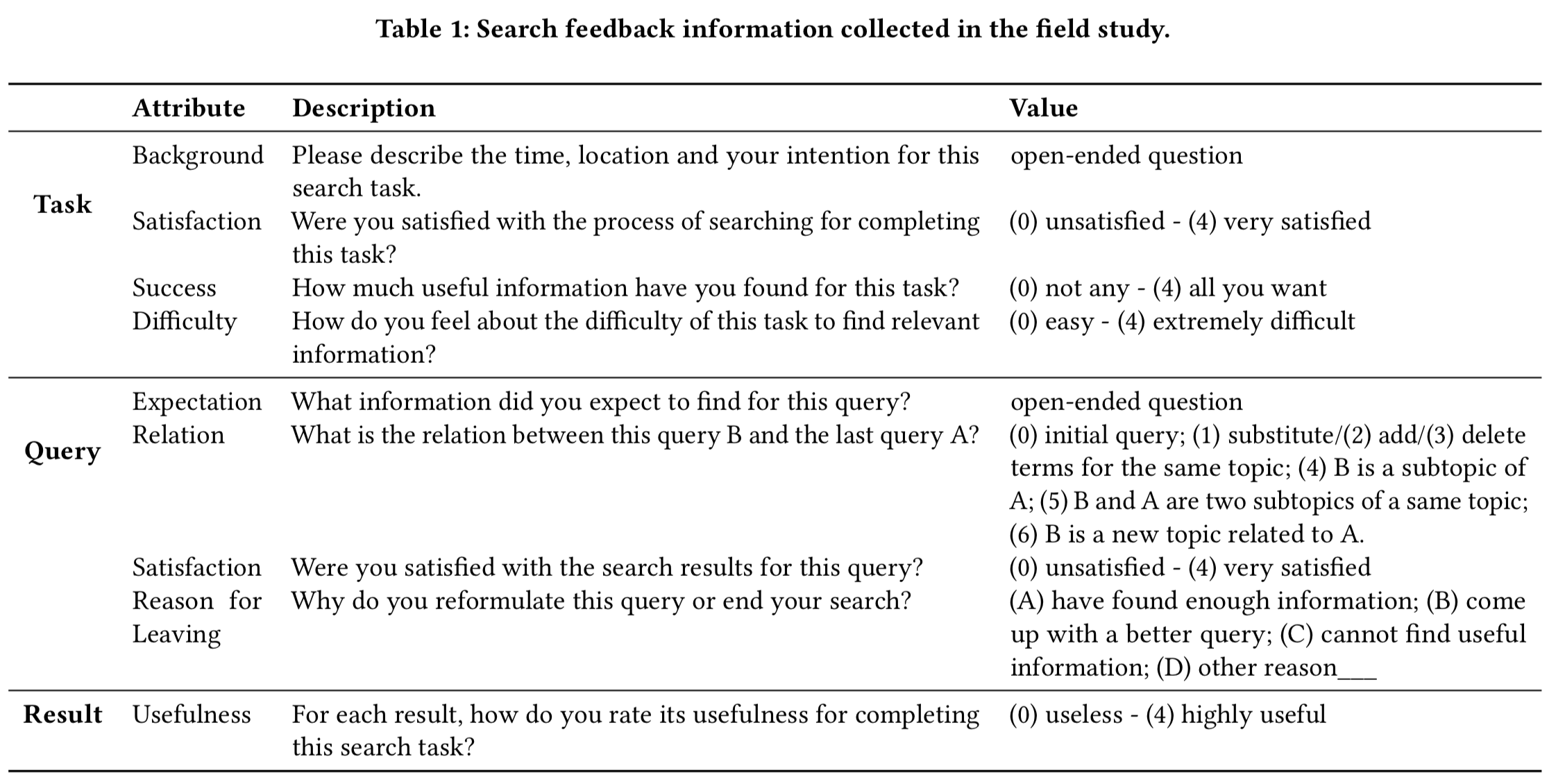

- User feedback data: Participants are asked to review their search history and provide feedback. To protect their privacy, they may freely discard any log they are unwilling to share with us. Because we aim to investigate the relationship between the accuracy of user models and their correlation with user satisfaction at the query level, we ask participants to provide query-level search satisfaction. We also ask them to describe the underlying search goals, which are used in further annotation. An overview of the search feedback collected in the field study is shown in Table 1.
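The behavior signals listed above appear as fields in the JSON layout described under Dataset Organization. The following minimal sketch derives a few per-SERP features from one SERP record. The function name and feature names are hypothetical, and treating `click_dwell_time` as milliseconds (like the timestamps) is an assumption, since the schema does not state its unit.

```python
from typing import Any, Dict


def serp_behavior_features(serp: Dict[str, Any]) -> Dict[str, float]:
    """Summarize logged behavior on one SERP record (hypothetical helper).

    Field names follow the dataset's JSON layout; timestamps are Unix
    epoch milliseconds.
    """
    # A user may leave the SERP (e.g., for a landing page) and come back,
    # so the total SERP dwell time sums every (inT, outT) visit.
    dwell_ms = sum(iv["outT"] - iv["inT"] for iv in serp.get("time_intervals", []))
    moves = serp.get("mouse_moves", [])
    results = serp.get("results", [])
    return {
        "serp_dwell_s": dwell_ms / 1000.0,
        "num_moves": sum(1 for m in moves if m["Ty"] == "move"),
        "num_scrolls": sum(1 for m in moves if m["Ty"] == "scroll"),
        "num_clicks": sum(1 for r in results if r["clicked"]),
        # Assumption: click_dwell_time is in milliseconds (0 for unclicked results).
        "click_dwell_s": sum(r["click_dwell_time"] for r in results) / 1000.0,
    }
```

Summing all visits rather than taking only the first one keeps time spent after returning from a landing page.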

After filtering out invalid data (queries for which some user behavior or page content was not recorded successfully), we obtain a field study dataset of 3,875 queries with their corresponding user behavior and user feedback data. We also recruit nine external assessors to make 4-level graded relevance judgments for the search results in our field study. For each "query-document" pair, assessors are shown the underlying search goal for the query (collected during the field study), which helps them understand the information need behind it.
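Because each query has both graded relevance labels for its results and a query-level satisfaction label, one can score each query with a metric and correlate those scores with satisfaction. The sketch below does this with DCG@k (exponential gain, log2 discount) and Pearson's r. Both are illustrative choices, not necessarily the metrics or correlation statistic used in the paper, and the input format (a list of `(relevance list, satisfaction)` pairs) is hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def dcg(relevance: Sequence[int], k: int = 10) -> float:
    """DCG@k with gain 2^rel - 1 and discount log2(rank + 1)."""
    return sum((2 ** rel - 1) / math.log2(i + 2) for i, rel in enumerate(relevance[:k]))


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(vx * vy)


def metric_satisfaction_correlation(
    queries: List[Tuple[Sequence[int], int]], k: int = 10
) -> float:
    """Correlate per-query DCG@k with query-level satisfaction labels (0-4)."""
    scores = [dcg(rels, k) for rels, _ in queries]
    sats = [float(sat) for _, sat in queries]
    return pearson(scores, sats)
```

Swapping `dcg` for another metric function leaves the correlation step unchanged, which makes it easy to compare several metrics on the same queries.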

Dataset Organization

The dataset is organized hierarchically in JSON format (sessions, queries, SERPs, results), as shown below. Text following "--" is explanatory annotation.

```
[ -- All sessions
  { -- Each session
    "satisfaction": 4, -- Satisfaction with this session: 0 (very unsatisfied) to 4 (very satisfied)
    "user_id": "33",
    "time_location": "2018年11月24日晚上,宿舍", -- Time and place of the session (here: "evening of November 24, 2018, dormitory")
    "ending_type": 3, -- Session search success: 0 (not at all) to 3 (better than expected)
    "other_reason": "", -- Can be ignored
    "session_id": "693",
    "information_difficulty": 0, -- How difficult the user found it to locate useful information: 0 (very easy) to 4 (very difficult)
    "intent": "想要了解提名奖的含义", -- Intent of this session (here: "wants to understand what a nomination award means")
    "experience": 3, -- Session search experience: 0 (very bad) to 4 (very good)
    "background": "看到电影获奖情况后括附“提名”", -- Why the user started this session (here: "saw 'nomination' noted in parentheses in a film's award listing")
    "query_difficulty": 0, -- How difficult the user found it to formulate clear queries: 0 (very easy) to 4 (very difficult)
    "queries": [ -- All queries in this session
      { -- Each query
        "satisfaction": 4, -- Satisfaction with this query: 0 (very unsatisfied) to 4 (very satisfied)
        "start_timestamp": 1543058349687, -- When the user issued this query (Unix epoch, milliseconds)
        "goal": "我认为最后的结果应当包括对提名奖的解释以及与正式的获奖的区别", -- What the user expected this query to achieve (here: "I think the final results should explain nomination awards and how they differ from formally winning an award")
        "ending_type": 3, -- Query search success: 0 (not at all) to 3 (better than expected)
        "other_reason": "", -- Can be ignored
        "query_id": "3175",
        "query_string": "获奖和提名的区别", -- Query text (here: "difference between winning an award and being nominated")
        "relation": 0, -- Relation between this query (B) and the previous query (A); see Table 1 for details
        "NIT": 2, -- Query type: 1 = navigational, 2 = informational, 3 = transactional
        "SERPs": [ -- All SERPs of this query
          { -- Each SERP
            "page_id": 1, -- SERP page number
            "serp_id": "37705",
            "mouse_moves": [ -- All mouse movements on this SERP
              { -- Each mouse movement
                "Sy": 31, -- y coordinate of the start point
                "Sx": 277, -- x coordinate of the start point
                "Ty": "move", -- Movement type: "move" or "scroll"
                "St": 1543058350518, -- Start timestamp
                "Ey": 98, -- y coordinate of the end point
                "Ex": 265, -- x coordinate of the end point
                "Et": 1543058350677 -- End timestamp
              },
              ... -- More mouse movements
            ],
            "time_intervals": [ -- All time intervals spent on this SERP
              { -- Each time interval
                "inT": 1543058349687, -- Timestamp when the user entered the SERP
                "outT": 1543058354636 -- Timestamp when the user left the SERP
              },
              ... -- More time intervals
            ],
            "results": [ -- All results on this SERP
              { -- Each result
                "clicked": 1, -- Whether the user clicked this result
                "type": "se_com_default", -- Organic or vertical type of the result
                "top": 138.0, -- y coordinate of the top edge of this result
                "click_timestamp": 1543058355305, -- When the user clicked this result (0 for an unclicked result)
                "click_dwell_time": 11202.0, -- Click dwell time on this result (0 for an unclicked result)
                "rank": 1, -- Rank of this result (starting from 1)
                "relevance": 1, -- Relevance judgment: 0 (irrelevant) to 3 (highly relevant)
                "height": 189, -- Height of this result
                "result_id": "1",
                "usefulness": 4 -- Usefulness feedback for this result: 0 (useless) to 4 (highly useful)
              },
              ... -- More results
            ]
          },
          ... -- More SERPs
        ]
      },
      ... -- More queries
    ]
  },
  ... -- More sessions
]
```
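A minimal sketch for walking this hierarchy in Python and flattening it into one record per query. It assumes the released file is plain JSON whose top level is the list of sessions (the "--" annotations above are documentation only). The helper names and output record keys are hypothetical. Because relevance judgments cover first-page results, the sketch keeps only the first SERP record with `page_id == 1` for each query.

```python
import json
from typing import Any, Dict, Iterator, List


def iter_queries(sessions: List[Dict[str, Any]]) -> Iterator[Dict[str, Any]]:
    """Yield one flat record per query from the session -> query -> SERP -> result tree."""
    for session in sessions:
        for query in session["queries"]:
            # Use the first page-1 SERP record; judgments cover first-page results.
            first_page = next((s for s in query["SERPs"] if s["page_id"] == 1), None)
            results = first_page["results"] if first_page else []
            ranked = sorted(results, key=lambda r: r["rank"])
            yield {
                "session_id": session["session_id"],
                "query_id": query["query_id"],
                "query_string": query["query_string"],
                "satisfaction": query["satisfaction"],  # 0-4
                "relevance": [r["relevance"] for r in ranked],  # 0-3, in rank order
                "clicks": [r["clicked"] for r in ranked],  # 1 if clicked
            }


def load_queries(path: str) -> List[Dict[str, Any]]:
    """Load a dataset file (hypothetical path) and flatten it per query."""
    with open(path, encoding="utf-8") as f:
        sessions = json.load(f)
    return list(iter_queries(sessions))
```

Each record pairs the first-page relevance labels and clicks with the query-level satisfaction label, which is the pairing needed for query-level analysis of metrics against satisfaction.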

How to get TianGong-SS-FSD

We provide a demo of TianGong-SS-FSD containing 2 sessions to help researchers get started quickly. To obtain the full TianGong-SS-FSD dataset, please contact us at thuir_datamanage@126.com. After you sign an online application form, we will send you the data.

Citation

If you use TianGong-SS-FSD in your research, please add the following BibTeX citation to your references. A preprint of this paper can be found here: TianGong-SS-FSD.

```bibtex
@inproceedings{zhang2020models,
  title={Models Versus Satisfaction: Towards a Better Understanding of Evaluation Metrics},
  author={Zhang, Fan and Mao, Jiaxin and Liu, Yiqun and Xie, Xiaohui and Ma, Weizhi and Zhang, Min and Ma, Shaoping},
  booktitle={Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval},
  year={2020},
  organization={ACM}
}
```